This article shows how to repair and/or delete inaccessible objects in a vSAN cluster. This kind of problem shows up when you mistreat your vSAN cluster 🙁. In a lab it is nothing serious, but in a production environment you need to be careful not to reproduce it, because it can cause a lot of damage, such as lost files or VMs that become “inaccessible”.

In my case, the problem appeared during a vSAN storage policy change: at the same time, I brutally powered off one of my ESXi hosts (for a long time) without first putting it into maintenance mode.

The repair / deletion is done in 4 steps:

- step 1: Full scan of the cluster looking for “inaccessible vSAN objects”

- step 2: Purge of the “inaccessible vswp objects”

- step 3: New full scan of the cluster

- step 4: Deletion of the remaining objects

Step 1

Connect to the vCenter over SSH and start an RVC console.

VMware vCenter Server Appliance 6.7.0.20000

Type: vCenter Server with an embedded Platform Services Controller

Connected to service

* List APIs: "help api list"

* List Plugins: "help pi list"

* Launch BASH: "shell"

Command> shell

Shell access is granted to root

root@vcsa01a [ ~ ]# rvc [email protected]@localhost

- Once the password has been entered, you should see:

    0 /
    1 localhost/
    >

- In RVC, you navigate the vCenter inventory with the “ls” and “cd” commands

cd localhost/LABDC/computers/

- Run “ls” to list the available clusters

    /localhost/LABDC/computers> ls
    0 CL-MGMT01 (cluster):
    1 CL-CMPT01 (cluster):
    2 vsanwitness01.mchelgham.lab (standalone):

Run a check with the vsan.check_state command followed by the name of the vSAN cluster.

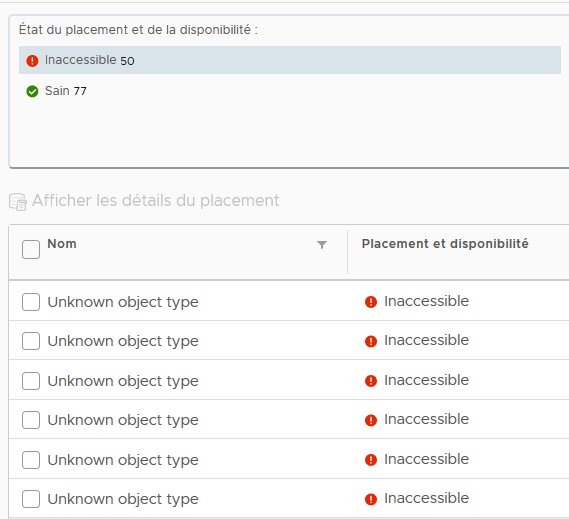

/localhost/LABDC/computers> vsan.check_state CL-CMPT01

You should get output similar to the one below (in my case: Detected 50 objects to be inaccessible).

    2019-03-10 13:34:26 +0000: Step 1: Check for inaccessible vSAN objects
    Detected 50 objects to be inaccessible
    Detected 2be3725c-2835-e60a-9119-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 27e5725c-4328-d10b-8569-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 2be5725c-0584-c514-2d93-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 2ee3725c-a470-be15-7859-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected bee2725c-3ef0-9518-722e-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 86e4725c-bdb4-bb1b-3451-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 8ee3725c-2fb8-021f-3b30-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 58e3725c-5c1b-8d24-b062-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected ebe3725c-a0ea-7b27-a09a-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 00e4725c-595f-072c-e42d-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 4fe4725c-830d-a12d-38df-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected eae3725c-f197-d52f-d5ef-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 24e3725c-3008-8e31-7dbc-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 51e4725c-03bf-0338-59ea-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 34e3725c-cdf5-5a3d-e913-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 2ee5725c-300e-3840-876d-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 6ed6725c-bc05-cc40-952e-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 2ce5725c-8d9d-d74c-15af-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected ece3725c-74d6-5e5e-b7be-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 2ae5725c-1b47-1060-3eda-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected d0e3725c-6163-9762-76d1-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 45b6725c-73f9-bb63-2d7c-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 3ae3725c-b351-d364-e248-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected dd0e735c-7a95-2967-08a4-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected ce0e735c-5e7d-ed6d-1e80-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected f0e3725c-0b79-3b70-30e6-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 51e4725c-f4d2-0d71-c9a3-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 4fe4725c-5d13-bb72-c2ec-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected c0e2725c-3dd0-f478-faac-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected ce0e735c-f4e5-2c7b-29f2-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected eae3725c-da8e-1c82-ced6-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 2be5725c-e219-cb9b-7385-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 3fe3725c-2984-3e9c-9388-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 010f735c-5c18-57a7-7c33-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 51e4725c-b17f-3fab-9c89-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 920e735c-c57e-9ab6-d9a8-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 50e4725c-5926-01b7-6675-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 800e735c-3303-7cba-b842-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 400e735c-b39d-eabe-ce92-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected ebe3725c-7a77-debf-c7a0-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 7e0e735c-1c84-42cc-3e73-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected d1e4725c-dd14-f5d4-9b30-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 2be5725c-1764-89d7-5c0f-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected f3e3725c-5080-a0de-b23b-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 52e4725c-0ec6-c0e3-162e-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 1ee3725c-6e37-bfe7-aa60-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected ebe3725c-5564-15f3-8eb4-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 50e4725c-1565-bdf6-61ad-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 95e3725c-7438-28f7-c75c-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 2fe5725c-2603-09fe-5d0d-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    2019-03-10 13:34:26 +0000: Step 2: Check for invalid/inaccessible VMs
    2019-03-10 13:34:26 +0000: Step 3: Check for VMs for which VC/hostd/vmx are out of sync
    Did not find VMs for which VC/hostd/vmx are out of sync
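With this many objects, copying UUIDs by hand gets error-prone. The snippet below is a minimal Python sketch, not part of RVC: it assumes the vsan.check_state output has been saved as text and that every object is reported as "Detected <UUID> on <host> to be inaccessible", as in the listing above. The function name parse_inaccessible is hypothetical.

```python
import re
from collections import defaultdict

# Matches "Detected <uuid> on <host> to be inaccessible" as printed by vsan.check_state.
# The summary line "Detected 50 objects ..." is skipped because "50" is not a UUID.
DETECTED = re.compile(
    r"Detected ([0-9a-f]{8}(?:-[0-9a-f]{4}){3}-[0-9a-f]{12}) on (\S+) to be inaccessible"
)

def parse_inaccessible(output):
    """Group inaccessible object UUIDs by the host named on each line."""
    by_host = defaultdict(list)
    for uuid, host in DETECTED.findall(output):
        by_host[host].append(uuid)
    return dict(by_host)

# Shortened sample taken from the scan above.
sample = (
    "2019-03-10 13:34:26 +0000: Step 1: Check for inaccessible vSAN objects\n"
    "Detected 50 objects to be inaccessible\n"
    "Detected 2be3725c-2835-e60a-9119-002655daa884 on esxi01a.mchelgham.lab to be inaccessible\n"
    "Detected 27e5725c-4328-d10b-8569-002655db0344 on esxi01a.mchelgham.lab to be inaccessible\n"
)

for host, uuids in parse_inaccessible(sample).items():
    print(host, len(uuids))
```

Because the pattern is applied with findall, it also works on output that was pasted as a single long line.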

Step 2

We will now purge the vswp objects that are stale and no longer accessible, using the vsan.purge_inaccessible_vswp_objects command followed by the cluster name.

/localhost/LABDC/computers> vsan.purge_inaccessible_vswp_objects CL-CMPT01

You should get something like this (in my case: Found 9 inaccessible vswp objects.)

    2019-03-10 13:37:27 +0000: Collecting all inaccessible vSAN objects...
    2019-03-10 13:37:27 +0000: Found 50 inaccessbile objects.
    2019-03-10 13:37:27 +0000: Selecting vswp objects from inaccessible objects by checking their extended attributes...
    2019-03-10 13:37:31 +0000: Found 9 inaccessible vswp objects.
    +--------------------------------------+---------------------------------------------------------------------------------------------------------------------------+-------------------------+
    | Object UUID                          | Object Path                                                                                                               | Size                    |
    +--------------------------------------+---------------------------------------------------------------------------------------------------------------------------+-------------------------+
    | 45b6725c-73f9-bb63-2d7c-002655db0344 | /vmfs/volumes/vsan:52a302c1e2dd0b4d-e21261438178731a/0aa4695c-fa0f-3269-dbe1-002655db0344/HCIBench_1.6.8.7-46a0a40e.vswp  | 8589934592B (8.00 GB)   |
    | dd0e735c-7a95-2967-08a4-002655db0344 | /vmfs/volumes/vsan:52a302c1e2dd0b4d-e21261438178731a/d351465c-a762-6d0b-2998-002655db0344/labexpert-vra-a629883b.vswp     | 12884901888B (12.00 GB) |
    | ce0e735c-5e7d-ed6d-1e80-002655db0344 | /vmfs/volumes/vsan:52a302c1e2dd0b4d-e21261438178731a/ab7a475c-b68b-1060-a376-002655db0344/labexpert-iaas-fc7d59ec.vswp    | 4294967296B (4.00 GB)   |
    | ce0e735c-f4e5-2c7b-29f2-002655db0344 | /vmfs/volumes/vsan:52a302c1e2dd0b4d-e21261438178731a/0a8e475c-f287-bbab-917d-002655db0344/labvra-sql-3ea253f8.vswp        | 4294967296B (4.00 GB)   |
    | 010f735c-5c18-57a7-7c33-002655db0344 | /vmfs/volumes/vsan:52a302c1e2dd0b4d-e21261438178731a/54c7375c-2ede-47cf-43d1-002655db0344/NSXMGMT01-d0ea65a9.vswp         | 17179869184B (16.00 GB) |
    | 920e735c-c57e-9ab6-d9a8-002655db0344 | /vmfs/volumes/vsan:52a302c1e2dd0b4d-e21261438178731a/00c7375c-d2fc-ad52-590f-002655daa884/labaxians-win7-a39e16b8.vswp    | 4294967296B (4.00 GB)   |
    | 800e735c-3303-7cba-b842-002655db0344 | /vmfs/volumes/vsan:52a302c1e2dd0b4d-e21261438178731a/fbc6375c-d1d5-22ab-8c0f-002655daa884/labaxians-vesxi01-3a430f56.vswp | 21474836480B (20.00 GB) |
    | 400e735c-b39d-eabe-ce92-002655db0344 | /vmfs/volumes/vsan:52a302c1e2dd0b4d-e21261438178731a/01c7375c-0aeb-dbf4-222e-002655daa884/labaxians-vcsa67-4207c99d.vswp  | 10737418240B (10.00 GB) |
    | 7e0e735c-1c84-42cc-3e73-002655daa884 | /vmfs/volumes/vsan:52a302c1e2dd0b4d-e21261438178731a/6de4495c-9043-5331-763d-002655daa884/labexpert-ad-88b91a45.vswp      | 2147483648B (2.00 GB)   |
    +--------------------------------------+---------------------------------------------------------------------------------------------------------------------------+-------------------------+
    2019-03-10 13:37:31 +0000: Ready to delete the inaccessible vswp object...
    VM vswp file is used for memory swapping for running VMs by ESX.
    In VMware virtual SAN a vswp file is stored as a separate virtual SAN object.
    When a vswp object goes inaccessible, memory swapping will not be possible and the VM may crash when next time ESX tries to swap the memory for the VM.
    Deleting the inaccessible vswp object will not make thing worse, but it will eliminate the possibility for the object to regain accessibility in future time if this is just a temporary issue (e.g. due to network failure or planned maintenance).
    Due to a known issue in vSphere 5.5, it is possible for vSAN to have done incomplete deletions of vswp objects.
    In such cases, the majority of components of such objects were deleted while a minority of components were left unavailable (e.g. due to one host being temporarily down at the time of deletion).
    It is then possible for the minority to resurface and present itself as an inaccessible object because a minority can never gain quorum.
    Such objects waste space and cause issues for any operations involving data evacuations from hosts or disks.
    This command employs heuristics to detect this kind of left-over vswp objects in order to delete them.
    It will not cause data loss by deleting the vswp object.
    The vswp object will be regenerated when the VM is powered on next time.
    Delete /vmfs/volumes/vsan:52a302c1e2dd0b4d-e21261438178731a/0aa4695c-fa0f-3269-dbe1-002655db0344/HCIBench_1.6.8.7-46a0a40e.vswp (45b6725c-73f9-bb63-2d7c-002655db0344)?
    [Y] Yes [N] No [A] yes to All [C] Cancel to all:

At the last prompt, type A to confirm.

[Y] Yes [N] No [A] yes to All [C] Cancel to all: A

Step 3

We run the scan again to verify that the number of inaccessible objects has gone down (in my case: Detected 41 objects to be inaccessible).

    /localhost/LABDC/computers> vsan.check_state CL-CMPT01
    2019-03-10 13:45:27 +0000: Step 1: Check for inaccessible vSAN objects
    Detected 41 objects to be inaccessible
    Detected 2be3725c-2835-e60a-9119-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 27e5725c-4328-d10b-8569-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 2be5725c-0584-c514-2d93-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 2ee3725c-a470-be15-7859-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected bee2725c-3ef0-9518-722e-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 86e4725c-bdb4-bb1b-3451-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 8ee3725c-2fb8-021f-3b30-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 58e3725c-5c1b-8d24-b062-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected ebe3725c-a0ea-7b27-a09a-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 00e4725c-595f-072c-e42d-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 4fe4725c-830d-a12d-38df-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected eae3725c-f197-d52f-d5ef-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 24e3725c-3008-8e31-7dbc-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 51e4725c-03bf-0338-59ea-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 34e3725c-cdf5-5a3d-e913-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 2ee5725c-300e-3840-876d-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 6ed6725c-bc05-cc40-952e-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 2ce5725c-8d9d-d74c-15af-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected ece3725c-74d6-5e5e-b7be-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 2ae5725c-1b47-1060-3eda-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected d0e3725c-6163-9762-76d1-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 3ae3725c-b351-d364-e248-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected f0e3725c-0b79-3b70-30e6-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 51e4725c-f4d2-0d71-c9a3-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 4fe4725c-5d13-bb72-c2ec-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected c0e2725c-3dd0-f478-faac-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected eae3725c-da8e-1c82-ced6-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 2be5725c-e219-cb9b-7385-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 3fe3725c-2984-3e9c-9388-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 51e4725c-b17f-3fab-9c89-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 50e4725c-5926-01b7-6675-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected ebe3725c-7a77-debf-c7a0-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected d1e4725c-dd14-f5d4-9b30-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 2be5725c-1764-89d7-5c0f-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected f3e3725c-5080-a0de-b23b-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 52e4725c-0ec6-c0e3-162e-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 1ee3725c-6e37-bfe7-aa60-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected ebe3725c-5564-15f3-8eb4-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 50e4725c-1565-bdf6-61ad-002655daa884 on esxi01a.mchelgham.lab to be inaccessible
    Detected 95e3725c-7438-28f7-c75c-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    Detected 2fe5725c-2603-09fe-5d0d-002655db0344 on esxi01a.mchelgham.lab to be inaccessible
    2019-03-10 13:45:27 +0000: Step 2: Check for invalid/inaccessible VMs
    2019-03-10 13:45:27 +0000: Step 3: Check for VMs for which VC/hostd/vmx are out of sync
    Did not find VMs for which VC/hostd/vmx are out of sync

As you can see, all the objects are on the same ESXi host, esxi01a.
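To confirm that the drop from 50 to 41 corresponds exactly to the 9 purged vswp objects, the two scans can be compared as sets of UUIDs. This is a minimal Python sketch under the assumption that both vsan.check_state outputs were saved as text; the helper names are hypothetical, and the sample strings contain only a few objects from the listings above.

```python
import re

# Captures the UUID from each "Detected <uuid> on <host> ..." line.
UUID = re.compile(r"Detected ([0-9a-f]{8}(?:-[0-9a-f]{4}){3}-[0-9a-f]{12}) on")

def inaccessible_uuids(output):
    """Return the set of object UUIDs reported as inaccessible."""
    return set(UUID.findall(output))

def removed_between(before, after):
    """UUIDs present in the first scan but gone from the second."""
    return inaccessible_uuids(before) - inaccessible_uuids(after)

# Shortened samples: one regular object and two vswp objects before the purge.
before = (
    "Detected 2be3725c-2835-e60a-9119-002655daa884 on esxi01a.mchelgham.lab to be inaccessible\n"
    "Detected 45b6725c-73f9-bb63-2d7c-002655db0344 on esxi01a.mchelgham.lab to be inaccessible\n"
    "Detected dd0e735c-7a95-2967-08a4-002655db0344 on esxi01a.mchelgham.lab to be inaccessible\n"
)
after = (
    "Detected 2be3725c-2835-e60a-9119-002655daa884 on esxi01a.mchelgham.lab to be inaccessible\n"
)

for uuid in sorted(removed_between(before, after)):
    print(uuid)
```

Run against the full outputs, the difference should match the Object UUID column of the purge table; whatever is left in the second scan is what step 4 has to deal with.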

Step 4

In this step, we first connect over SSH to the ESXi host that holds the inaccessible objects.

ssh [email protected]

Next, we delete the objects one by one with the command below

/usr/lib/vmware/osfs/bin/objtool delete -u UUID -f -v 10

Replace UUID with the UUID of your own object

/usr/lib/vmware/osfs/bin/objtool delete -u 2be5725c-0584-c514-2d93-002655db0344 -f -v 10

Do the same for all your inaccessible UUIDs…
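With around forty UUIDs, typing each command by hand is tedious. The Python sketch below only builds the command lines, it does not execute anything: it reuses the objtool path and flags shown above as-is, checks that each value looks like a vSAN object UUID, and prints one command per object so they can be reviewed before being pasted into the ESXi SSH session. The function name delete_commands is hypothetical.

```python
import re
import shlex

# Path and flags copied from the command shown above.
OBJTOOL = "/usr/lib/vmware/osfs/bin/objtool"
UUID_RE = re.compile(r"[0-9a-f]{8}(?:-[0-9a-f]{4}){3}-[0-9a-f]{12}")

def delete_commands(uuids):
    """Return one objtool delete command line per UUID (nothing is executed)."""
    commands = []
    for uuid in uuids:
        # Refuse anything that is not a well-formed UUID, e.g. a truncated paste.
        if not UUID_RE.fullmatch(uuid):
            raise ValueError(f"not a vSAN object UUID: {uuid!r}")
        commands.append(shlex.join([OBJTOOL, "delete", "-u", uuid, "-f", "-v", "10"]))
    return commands

remaining = [
    "2be3725c-2835-e60a-9119-002655daa884",
    "27e5725c-4328-d10b-8569-002655db0344",
    "2be5725c-0584-c514-2d93-002655db0344",
]

for line in delete_commands(remaining):
    print(line)
```

Generating the commands instead of running them keeps a human in the loop, which seems prudent given that the deletion is forced with -f.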

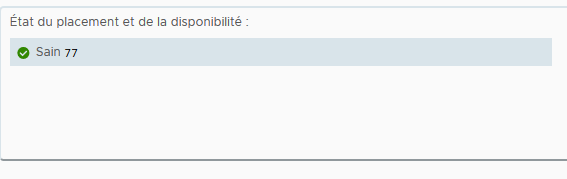

Once finished, rescan the cluster with the vsan.check_state command followed by the cluster name; you should get:

    /localhost/LABDC/computers> vsan.check_state CL-CMPT01
    2019-03-10 14:08:22 +0000: Step 1: Check for inaccessible vSAN objects
    Detected 0 objects to be inaccessible
    2019-03-10 14:08:22 +0000: Step 2: Check for invalid/inaccessible VMs
    2019-03-10 14:08:22 +0000: Step 3: Check for VMs for which VC/hostd/vmx are out of sync
    Did not find VMs for which VC/hostd/vmx are out of sync

That's it, your cluster is now healthy.